文章目录 需求需求分析分页问题说明分页问题原因一对多场景一一对多场景二性能优化优化解决方案 需求 查询用户信息列表,其中包含用户对应角色信息,页面检索条件有根据角色名称查询用户列

查询用户信息列表,其中包含用户对应角色信息,页面检索条件有根据角色名称查询用户列表;

一个用户对应多个角色,用户信息和角色信息分表根据用户id关联存储,用户和角色一对多进行表连接查询,

创建对应表:

CREATE TABLE `sys_user` ( `id` bigint NOT NULL AUTO_INCREMENT COMMENT '用户ID', `name` varchar(50) DEFAULT NULL COMMENT '姓名', `age` int DEFAULT NULL COMMENT '年龄', PRIMARY KEY (`id`) USING BTREE) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='用户信息表';CREATE TABLE `sys_role` ( `id` bigint NOT NULL AUTO_INCREMENT COMMENT '角色ID', `role_name` varchar(30) NOT NULL COMMENT '角色名称', PRIMARY KEY (`id`)) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='角色信息表';CREATE TABLE `sys_user_role` ( `id` bigint NOT NULL AUTO_INCREMENT COMMENT 'ID', `user_id` bigint NOT NULL COMMENT '用户ID', `role_id` bigint NOT NULL COMMENT '角色ID', PRIMARY KEY (`id`)) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='用户和角色关联表';INSERT INTO tsq.sys_user (name,age) VALUES ('张三',18), ('王二',19);INSERT INTO tsq.sys_role (role_name) VALUES ('角色1'), ('角色2'), ('角色3'), ('角色4');INSERT INTO tsq.sys_user_role (user_id,role_id) VALUES (1,1), (1,2), (1,3), (2,4);对应实体类:

@Data@apiModel("用户信息表")@TableName("sys_user")public class User implements Serializable { private static final long serialVersionUID = 1L; @ApiModelProperty("用户id") private Long id; @ApiModelProperty("姓名") private String name; @ApiModelProperty("年龄") private Integer age;}@Data@ApiModel("角色信息表")@TableName("sys_role")public class Role implements Serializable { private static final long serialVersionUID = 1L; @ApiModelProperty("角色id") private Long id; @ApiModelProperty("角色名称") private String roleName;}@Data@ApiModel("用户信息表")public class UserVo implements Serializable { private static final long serialVersionUID = 1L; @ApiModelProperty("用户id") private Long id; @ApiModelProperty("姓名") private String name; @ApiModelProperty("年龄") private Integer age; private List<Role> roleList;}在使用一对多连接查询并且分页时,发现返回的分页列表数据数量不对

比如这里查询用户对应角色列表,如果使用直接映射,那么 roleList 的每个 Role 对象都会算一条数据;比如查第一页,一个用户有三个角色每页三条数据,就会出现查出一个 User ,三个 Role 的这些情况,这它也算每页三条(其实就只查到一个用户)

mybatis-plus一对多分页时,应该使用子查询的映射方式,使用直接映射就会出错

所以直接映射适用于一对一,子查询映射使用于一对多;

查询用户表的内容,角色表不参与条件查询,用懒加载形式

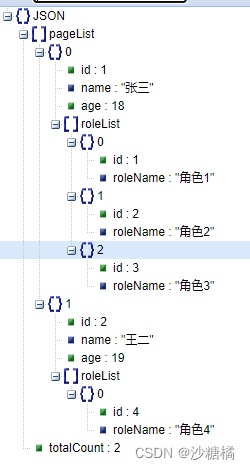

// controller @GetMapping("/pageList")public Map<String, Object> pageList(@RequestParam(required = false, defaultValue = "0") int offset, @RequestParam(required = false, defaultValue = "10") int pagesize) {return userService.pageList(offset, pagesize);} // serviceimpl @Overridepublic Map<String, Object> pageList(int offset, int pagesize) { List<UserVo> pageList = userMapper.pageList(offset, pagesize); int totalCount = userMapper.pageListCount(); Map<String, Object> result = new HashMap<String, Object>(); result.put("pageList", pageList); result.put("totalCount", totalCount); return result;} // mapper.xml <resultMap id="getUserInfo" type="com.tsq.democase.onetomany.domain.vo.UserVo" > <result column="id" property="id" /> <result column="name" property="name" /> <result column="age" property="age" /> <collection property="roleList" javaType="ArrayList" ofType="com.tsq.democase.onetomany.domain.Role" select="getRolesByUserId" column="{userId = id}"/> </resultMap> <select id="getRolesByUserId" resultType="com.tsq.democase.onetomany.domain.Role"> SELECT * FROM sys_user_role ur inner join sys_role r on ur.role_id = r.id where ur.user_id = #{userId} </select> <select id="pageList" resultMap="getUserInfo"> SELECT * FROM sys_user LIMIT #{offset}, #{pageSize} </select> <select id="pageListCount" resultType="java.lang.Integer"> SELECT count(1) FROM sys_user </select>查询结果

查询用户表的内容,角色表要作为查询条件参与查询,例如要根据角色名称查询出用户列表

// controller@GetMapping("/pageListByRoleName")public Map<String, Object> pageListByRoleName(@RequestParam(required = false, defaultValue = "0") int offset, @RequestParam(required = false, defaultValue = "10") int pagesize, @RequestParam String roleName) {return userService.pageListByRoleName(offset, pagesize, roleName);} // serviceimpl@Overridepublic Map<String, Object> pageListByRoleName(int offset, int pagesize,String roleName) { List<UserVo> pageList = userMapper.pageListByRoleName(offset, pagesize, roleName); int totalCount = userMapper.pageListCount(); Map<String, Object> result = new HashMap<String, Object>(); result.put("pageList", pageList); result.put("totalCount", totalCount); return result;} // mapper.xml <resultMap id="getUserInfoByRoleName" type="com.tsq.democase.onetomany.domain.vo.UserVo" > <result column="id" property="id" /> <result column="name" property="name" /> <result column="age" property="age" /> <collection property="roleList" javaType="ArrayList" ofType="com.tsq.democase.onetomany.domain.Role" select="getRolesByUserIdAndRoleName" column="{userId = id,roleName = roleName}"/> </resultMap> <select id="getRolesByUserIdAndRoleName" resultType="com.tsq.democase.onetomany.domain.Role"> SELECT * FROM sys_user_role ur inner join sys_role r on ur.role_id = r.id where ur.user_id = #{userId} <if test="roleName != null and roleName != ''" > and r.role_name LIKE concat('%', #{roleName}, '%') </if> </select> <select id="pageListByRoleName" resultMap="getUserInfoByRoleName"> SELECT temp.* FROM ( SELECT distinct u.*,#{roleName} as roleName FROM sys_user u left join sys_user_role ur on u.id = ur.user_id left join sys_role r on r.id = ur.role_id <where> <if test="roleName != null and roleName != ''" > r.role_name LIKE concat('%', #{roleName}, '%') </if> </where> ) temp LIMIT #{offset}, #{pageSize} </select>查询结果

原因:

场景一二中使用 select方式会触发多次子查询(SELECT *FROM sys_user_role ur inner join sys_role …),当数据量大时会使查询速度很慢。

-- ==> SELECT temp.* FROM ( SELECT distinct u.*, '角色' as roleName FROM sys_user u left join sys_user_role ur on u.id = ur.user_id left join sys_role r on r.id = ur.role_id WHERE r.role_name LIKE concat('%', '角色', '%') ) temp LIMIT 0, 10 -- ====> SELECT * FROM sys_user_role ur inner join sys_role r on ur.role_id = r.id where ur.user_id = 1 and r.role_name LIKE concat('%', '角色', '%') -- ====> SELECT * FROM sys_user_role ur inner join sys_role r on ur.role_id = r.id where ur.user_id = 2 and r.role_name LIKE concat('%', '角色', '%') -- ==> SELECT count(1) FROM sys_user sql可见如果有100各用户就要执行一百次子查询,效率极低。

sql中只查询sys_user相关信息并且做roleName 过滤,roleList在java代码中用stream关联role并赋值roleList;

// serviceimpl @Override public Map<String, Object> pageListByRoleName(int offset, int pagesize,String roleName) { // List pageList = userMapper.pageListByRoleName(offset, pagesize, roleName); List<UserVo> pageList = userMapper.pageListByRoleName2(offset, pagesize, roleName); List<Long> userIds = pageList.stream().map(UserVo::getId).collect(Collectors.toList()); List<UserRoleVo> userRoleVos = userMapper.getUserRoleByUserIds(userIds); Map<Long, List<UserRoleVo>> userRoleMap = userRoleVos.stream().collect(Collectors.groupingBy(UserRoleVo::getUserId, Collectors.toList())); pageList.forEach(u -> { List<UserRoleVo> roleVos = userRoleMap.get(u.getId()); List<RoleVo> roles = BeanUtils.listCopy(roleVos, CopyOptions.create(), RoleVo.class); u.setRoleList(roles); }); int totalCount = userMapper.pageListCount(); Map<String, Object> result = new HashMap<String, Object>(); result.put("pageList", pageList); result.put("totalCount", totalCount); return result; }// mapper.xml<select id="pageListByRoleName2" resultType="com.tsq.democase.onetomany.domain.vo.UserVo"> SELECT distinct u.* FROM sys_user u left join sys_user_role ur on u.id = ur.user_id left join sys_role r on r.id = ur.role_id <where> <if test="roleName != null and roleName != ''" > r.role_name LIKE concat('%', #{roleName}, '%') </if> </where> LIMIT #{offset}, #{pageSize}</select> 查询结果

同场景二。

查询时产生的sql如下:

-- ==> SELECT distinct u.* FROM sys_user u left join sys_user_role ur on u.id = ur.user_id left join sys_role r on r.id = ur.role_id WHERE r.role_name LIKE concat('%', '角色', '%') LIMIT 0, 10 -- ==> SELECT ur.user_id , r.id roleId, r.role_name FROM sys_user_role ur inner join sys_role r on ur.role_id = r.id -- ==> SELECT count(1) FROM sys_user 由sql日志可见这种方式比纯sql方式效率高一些

来源地址:https://blog.csdn.net/weixin_38898423/article/details/127939212

--结束END--

本文标题: Mybatis Plus一对多联表查询及分页解决方案

本文链接: https://lsjlt.com/news/435927.html(转载时请注明来源链接)

有问题或投稿请发送至: 邮箱/279061341@qq.com QQ/279061341

2024-04-01

2024-04-03

2024-04-03

2024-01-21

2024-01-21

2024-01-21

2024-01-21

2023-12-23

回答

回答

回答

回答

回答

回答

回答

回答

回答

回答

0