Continued from: Http://onlyoulinux.blog.51cto.com/7941460/1554951

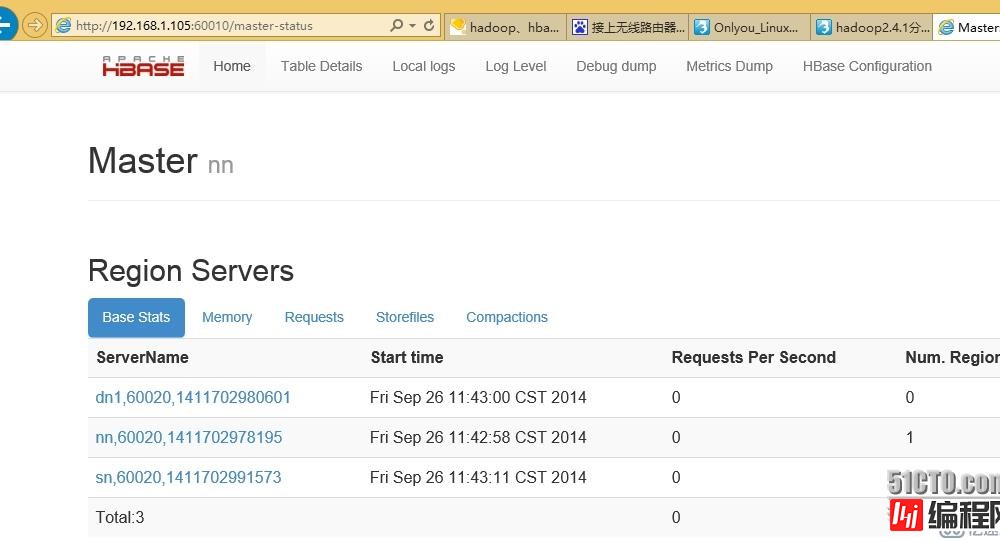

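The previous post described running distributed Hadoop 2.4.1 with HBase 0.94.23, which produced a large number of errors that I could not resolve. Switching to the newer HBase 0.96.2 with the same configuration produced no errors, so my impression is that this was a version-compatibility problem. The errors were as follows: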

Mon Sep 22 19:56:03 CST 2014 Starting master on nn

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 5525

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 10240

cpu time (seconds, -t) unlimited

max user processes (-u) 5525

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

2014-09-22 19:56:04,681 INFO org.apache.hadoop.hbase.util.VersionInfo: HBase 0.94.23

2014-09-22 19:56:04,681 INFO org.apache.hadoop.hbase.util.VersionInfo: Subversion git://asf911.gq1.ygridcore.net/home/jenkins/jenkins-slave/workspace/HBase-0.94.23 -r f42302b28aceaab773b15f234aa8718fff7eea3c

2014-09-22 19:56:04,681 INFO org.apache.hadoop.hbase.util.VersionInfo: Compiled by jenkins on Wed Aug 27 00:54:09 UTC 2014

2014-09-22 19:56:05,072 DEBUG org.apache.hadoop.hbase.master.HMaster: Set serverside HConnection retries=140

2014-09-22 19:56:05,852 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,853 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,854 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,855 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,856 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,857 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,858 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,858 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,859 INFO org.apache.hadoop.ipc.HBaseServer: Starting Thread-1

2014-09-22 19:56:05,863 INFO org.apache.hadoop.ipc.HBaseServer: Starting IPC Server listener on 60000

2014-09-22 19:56:05,889 INFO org.apache.hadoop.hbase.ipc.HBaseRpcMetrics: Initializing RPC Metrics with hostName=HMaster, port=60000

2014-09-22 19:56:06,510 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper: The identifier of this process is 3210@nn

2014-09-22 19:56:06,572 INFO org.apache.zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

2014-09-22 19:56:06,572 INFO org.apache.zookeeper.ZooKeeper: Client environment:host.name=nn

2014-09-22 19:56:06,572 INFO org.apache.zookeeper.ZooKeeper: Client environment:java.version=1.7.0_67

2014-09-22 19:56:06,572 INFO org.apache.zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

2014-09-22 19:56:06,572 INFO org.apache.zookeeper.ZooKeeper: Client environment:java.home=/usr/java/jdk1.7.0_67/jre

"logs/hbase-root-master-nn.log" 165L, 30411C 1,1 Top

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:489)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:434)

at org.apache.hadoop.ipc.Client$Connection.setupIOStreams(Client.java:560)

at org.apache.hadoop.ipc.Client$Connection.access$2000(Client.java:184)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1206)

at org.apache.hadoop.ipc.Client.call(Client.java:1050)

... 18 more

2014-09-22 19:56:18,228 INFO org.apache.hadoop.hbase.master.HMaster: Aborting

2014-09-22 19:56:18,228 DEBUG org.apache.hadoop.hbase.master.HMaster: Stopping service threads

2014-09-22 19:56:18,228 INFO org.apache.hadoop.ipc.HBaseServer: Stopping server on 60000

2014-09-22 19:56:18,231 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 0 on 60000: exiting

2014-09-22 19:56:18,231 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 1 on 60000: exiting

2014-09-22 19:56:18,231 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 2 on 60000: exiting

2014-09-22 19:56:18,232 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 3 on 60000: exiting

2014-09-22 19:56:18,232 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 4 on 60000: exiting

2014-09-22 19:56:18,232 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 5 on 60000: exiting

2014-09-22 19:56:18,233 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 6 on 60000: exiting

2014-09-22 19:56:18,233 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 7 on 60000: exiting

2014-09-22 19:56:18,233 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 8 on 60000: exiting

2014-09-22 19:56:18,234 INFO org.apache.hadoop.ipc.HBaseServer: IPC Server handler 9 on 60000: exiting

2014-09-22 19:56:18,234 INFO org.apache.hadoop.ipc.HBaseServer: REPL IPC Server handler 0 on 60000: exiting

2014-09-22 19:56:18,234 INFO org.apache.hadoop.ipc.HBaseServer: REPL IPC Server handler 1 on 60000: exiting

2014-09-22 19:56:18,234 INFO org.apache.hadoop.ipc.HBaseServer: REPL IPC Server handler 2 on 60000: exiting

2014-09-22 19:56:18,235 INFO org.apache.hadoop.ipc.HBaseServer: Stopping IPC Server listener on 60000

2014-09-22 19:56:18,240 INFO org.apache.hadoop.ipc.HBaseServer: Stopping IPC Server Responder

2014-09-22 19:56:18,240 INFO org.apache.hadoop.ipc.HBaseServer: Stopping IPC Server Responder

2014-09-22 19:56:18,241 INFO org.apache.hadoop.hbase.master.HMaster: Stopping infoServer

2014-09-22 19:56:18,247 INFO org.mortbay.log: Stopped SelectChannelConnector@0.0.0.0:60010

2014-09-22 19:56:18,278 INFO org.apache.zookeeper.ClientCnxn: EventThread shut down

2014-09-22 19:56:18,278 INFO org.apache.zookeeper.ZooKeeper: Session: 0x1489d3757740000 closed

2014-09-22 19:56:18,278 INFO org.apache.hadoop.hbase.master.HMaster: HMaster main thread exiting

2014-09-22 19:56:18,278 ERROR org.apache.hadoop.hbase.master.HMasterCommandLine: Failed to start master

java.lang.RuntimeException: HMaster Aborted

at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:160)

at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:104)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:76)

at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:2129)

2014-09-22 20:35:55,320 INFO org.mortbay.log: jetty-6.1.26

2014-09-22 20:35:57,557 INFO org.mortbay.log: Started SelectChannelConnector@0.0.0.0:60010

2014-09-22 20:35:57,645 INFO org.apache.hadoop.hbase.master.ActiveMasterManager: Deleting Znode for /hbase/backup-masters/nn,60000,1411389351537 from backup master directory

2014-09-22 20:35:58,013 WARN org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper: Node /hbase/backup-masters/nn,60000,1411389351537 already deleted, and this is not a retry

2014-09-22 20:35:58,013 INFO org.apache.hadoop.hbase.master.ActiveMasterManager: Master=nn,60000,1411389351537

2014-09-22 20:36:00,237 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 0 time(s).

2014-09-22 20:36:01,239 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 1 time(s).

2014-09-22 20:36:02,312 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 2 time(s).

2014-09-22 20:36:03,314 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 3 time(s).

2014-09-22 20:36:04,320 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 4 time(s).

2014-09-22 20:36:05,321 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 5 time(s).

2014-09-22 20:36:06,322 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 6 time(s).

2014-09-22 20:36:07,323 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 7 time(s).

2014-09-22 20:36:08,325 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 8 time(s).

2014-09-22 20:36:09,389 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 9 time(s).

2014-09-22 20:36:09,395 FATAL org.apache.hadoop.hbase.master.HMaster: Unhandled exception. Starting shutdown.

java.net.ConnectException: Call to localhost/127.0.0.1:9000 failed on connection exception: java.net.ConnectException: Connection refused

at org.apache.hadoop.ipc.Client.wrapException(Client.java:1099)

at org.apache.hadoop.ipc.Client.call(Client.java:1075)

at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:225)

at com.sun.proxy.$Proxy10.getProtocolVersion(Unknown Source)

at org.apache.hadoop.ipc.RPC.getProxy(RPC.java:396)

at org.apache.hadoop.ipc.RPC.getProxy(RPC.java:379)

at org.apache.hadoop.hdfs.DFSClient.createRPCNamenode(DFSClient.java:119)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:238)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:203)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:89)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:1386)
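The FATAL entry in this log comes after ten failed attempts to open a connection to localhost/127.0.0.1:9000. When reading a trace like this, it can help to first confirm whether anything is listening at that address from the master host. The following is only a minimal sketch of such a probe and was not part of my original troubleshooting; the function name `can_connect` is made up for illustration, and the two endpoints are simply the one that appears in the log and the one named in `hbase.rootdir` further down.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Assumed endpoints: localhost:9000 is what the log tried,
    # nn:9000 is what hbase.rootdir in this post points at.
    for host, port in [("localhost", 9000), ("nn", 9000)]:
        state = "reachable" if can_connect(host, port) else "NOT reachable"
        print(f"{host}:{port} is {state}")
```

A successful probe only shows that the port is open. It says nothing about whether the client and server speak the same RPC version, so it narrows the problem down rather than settling it.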

Here is my configuration:

> hbase-env.sh

export JAVA_HOME=/usr/java/jdk1.7.0_67/

export HBASE_HOME=/usr/local/hbase-0.96.2-hadoop2

export HADOOP_HOME=/usr/local/hadoop-2.4.1/

export HBASE_MANAGES_ZK=false
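Since my suspicion is a version mismatch, one thing worth looking at is which Hadoop client jars sit under `$HBASE_HOME/lib`, because HBase binary tarballs carry their own copies. The sketch below is an illustration under assumptions, not a definitive check: it infers versions purely from jar file names, the helper names are hypothetical, and comparing only the major version number is my own simplification.

```python
import os
import re
from pathlib import Path

# Matches names such as hadoop-core-1.0.4.jar or hadoop-hdfs-2.2.0-tests.jar.
_HADOOP_JAR = re.compile(r"^(hadoop-[a-z-]*[a-z])-(\d+(?:\.\d+)+)(?:-[\w.-]+)?\.jar$")


def bundled_hadoop_versions(lib_dir):
    """Collect the version strings of hadoop-*.jar files found in lib_dir."""
    versions = set()
    for jar in Path(lib_dir).glob("hadoop-*.jar"):
        match = _HADOOP_JAR.match(jar.name)
        if match:
            versions.add(match.group(2))
    return versions


def same_major(bundled, cluster_version):
    """True only if jars were found and all share the cluster's major version."""
    cluster_major = cluster_version.split(".")[0]
    return bool(bundled) and all(v.split(".")[0] == cluster_major for v in bundled)


if __name__ == "__main__":
    # The default path and the cluster version 2.4.1 are taken from this post.
    hbase_home = os.environ.get("HBASE_HOME", "/usr/local/hbase-0.96.2-hadoop2")
    found = bundled_hadoop_versions(Path(hbase_home) / "lib")
    print("bundled Hadoop jar versions:", sorted(found) or "none found")
    print("same major version as cluster 2.4.1:", same_major(found, "2.4.1"))
```

A matching major version does not guarantee compatibility. It is just a quick way to spot an HBase build that was packaged for a different Hadoop generation.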

> regionservers

[root@nn hbase-0.96.2-hadoop2]# cat conf/regionservers

nn

sn

dn1
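Every name in this file has to resolve to the right machine from every node. Host names that map to a loopback address in /etc/hosts are a commonly reported source of trouble in distributed setups, so a quick resolution check can be useful. This is a rough sketch with hypothetical helper names; it assumes the file holds one host name per line and is run from the HBase installation directory.

```python
import ipaddress
import socket
from pathlib import Path


def read_hosts(path):
    """Return the non-empty lines of a one-host-per-line file."""
    lines = Path(path).read_text().splitlines()
    return [line.strip() for line in lines if line.strip()]


def resolve(host):
    """Return the sorted addresses a host resolves to, or [] on failure."""
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return []
    return sorted({info[4][0] for info in infos})


def is_loopback(address):
    """True for 127.0.0.0/8 and ::1 (any IPv6 scope id is ignored)."""
    return ipaddress.ip_address(address.split("%")[0]).is_loopback


if __name__ == "__main__":
    hosts_file = Path("conf/regionservers")  # assumed relative location
    if hosts_file.exists():
        for host in read_hosts(hosts_file):
            addrs = resolve(host)
            if not addrs:
                print(f"{host}: does not resolve")
            elif any(is_loopback(a) for a in addrs):
                print(f"{host}: resolves to loopback {addrs}")
            else:
                print(f"{host}: {addrs}")
```

Running it on each node in turn shows whether nn, sn and dn1 resolve consistently across the cluster.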

> hbase-site.xml

[root@nn hbase-0.96.2-hadoop2]# cat conf/hbase-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

-->

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://nn:9000/tmpdir</value>

<description>The directory shared by RegionServers.</description>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

<description>The replication count for HLog and HFile storage. Should not be greater than HDFS datanode count.</description>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>nn,sn,dn1</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/tmpdir/zookeeperdata/</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

<description>Property from ZooKeeper's config zoo.cfg. The port at which the clients will connect.</description>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.hregion.memstore.flush.size</name>

<value>5242880</value>

</property>

<property>

<name>hbase.master</name>

<value>master:60000</value>

</property>

</configuration>
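A hand-edited hbase-site.xml is easy to get subtly wrong, so it can be worth loading it programmatically before starting the cluster. The sketch below is my own illustration with hypothetical function names: it lists any property name defined more than once and pulls the NameNode host and port out of `hbase.rootdir`, so they can be compared with the address the master actually tries to contact in its log.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from pathlib import Path
from urllib.parse import urlparse


def load_properties(xml_data):
    """Parse Hadoop-style config XML (str or bytes).

    Returns (properties, duplicates): a name-to-value dict and the sorted
    list of property names that appear more than once.
    """
    root = ET.fromstring(xml_data)
    pairs = [
        (prop.findtext("name", "").strip(), prop.findtext("value", "").strip())
        for prop in root.iter("property")
    ]
    counts = Counter(name for name, _ in pairs)
    duplicates = sorted(name for name, count in counts.items() if count > 1)
    return dict(pairs), duplicates


def namenode_endpoint(props):
    """Extract (host, port) from hbase.rootdir, e.g. hdfs://nn:9000/tmpdir."""
    url = urlparse(props["hbase.rootdir"])
    return url.hostname, url.port


if __name__ == "__main__":
    conf = Path("conf/hbase-site.xml")  # assumed relative location
    if conf.exists():
        props, duplicates = load_properties(conf.read_bytes())
        print("duplicate properties:", duplicates or "none")
        print("NameNode endpoint from hbase.rootdir:", namenode_endpoint(props))
```

When a name is repeated, the dict keeps the last value seen, which is an arbitrary choice in this sketch and not a statement about how HBase itself resolves repeats.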

Start up and verify:

[root@sn test]# hbase shell

2014-09-26 16:22:26,178 INFO [main] Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 0.96.2-hadoop2, r1581096, Mon Mar 24 16:03:18 PDT 2014

hbase(main):001:0> list

TABLE

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hbase-0.96.2-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.4.1/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

0 row(s) in 6.7970 seconds

=> []

hbase(main):002:0> create

create create_namespace

hbase(main):002:0> create 'test','stu_id','stu_xx'

0 row(s) in 18.0590 seconds

=> Hbase::Table - test

hbase(main):003:0> put 'test','1','stu_id:name','jaybing'

0 row(s) in 3.2750 seconds

hbase(main):004:0> scan 'test'

ROW COLUMN+CELL

1 column=stu_id:name, timestamp=1411720116252, value=jaybing

1 row(s) in 0.2280 seconds

hbase(main):005:0> list

TABLE

test

1 row(s) in 0.2460 seconds

=> ["test"]

hbase(main):006:0>
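The same create, put, scan and list sequence can be scripted so it is repeatable after each restart. The following is a sketch under assumptions: it relies on `hbase shell` reading commands from standard input, the function name is made up, and the table, column families and value are the ones used in the session above.

```python
import shutil
import subprocess


def smoke_test_script(table, families, row, column, value):
    """Build HBase shell commands: create a table, write one cell, read it back."""
    family_args = ",".join(f"'{name}'" for name in families)
    commands = [
        f"create '{table}',{family_args}",
        f"put '{table}','{row}','{column}','{value}'",
        f"scan '{table}'",
        "list",
        "exit",
    ]
    return "\n".join(commands) + "\n"


if __name__ == "__main__":
    script = smoke_test_script(
        "test", ["stu_id", "stu_xx"], "1", "stu_id:name", "jaybing"
    )
    if shutil.which("hbase") is None:
        # No hbase launcher on PATH: just show what would be sent.
        print(script)
    else:
        result = subprocess.run(
            ["hbase", "shell"], input=script, text=True, capture_output=True
        )
        print(result.stdout)
```

If the table already exists the create step will report an error, so on a cluster that has been tested before, pick a fresh table name or drop the old table first.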

Post title: Hadoop 2.4.1 with HBase 0.96.2