1. System Environment

PC environment: Ubuntu 18.04

Android version: 8.1

Android device: FriendlyARM (友善之臂) RK3399 development board

Camera: Logitech USB camera

FFmpeg version: 4.2.2

NDK version: r19c

Qt version: 5.12

2. Qt Code

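For compiling the FFmpeg libraries and setting up the Qt environment, see the previous articles.

Notes on the code:

The code has not been tidied up yet. Video recording, taking photos and stream pushing all live in a single .cpp file.

There is no multithreading yet. The three features are triggered by three buttons on the UI.

Because recording runs on the GUI thread, the recording length is hard-coded (`STREAM_DURATION`). The GUI does not respond while a recording is in progress.

Stream pushing has the same limitation.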
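A common alternative to a hard-coded duration is to run the capture loop on a worker thread and end it through an atomic stop flag, so that a "Stop" button can end the recording.

The following is a minimal sketch of that pattern in plain standard C++ rather than Qt. `encode_one_frame`, `record_until_stopped` and `record_on_worker` are hypothetical names. In a Qt app the worker would more likely be a `QThread` or `QtConcurrent::run`, and the per-frame step would be the article's `get_video_frame()` followed by `write_video_frame()`.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical placeholder for "grab, encode and write one frame";
// in the article's code this is get_video_frame() + write_video_frame().
static void encode_one_frame(int &frames_written) { ++frames_written; }

// Capture loop that ends when `stop` is set (e.g. from a "Stop" button slot)
// or after max_frames frames, instead of after a hard-coded duration.
int record_until_stopped(const std::atomic<bool> &stop, int max_frames)
{
    assert(max_frames >= 0);
    int frames_written = 0;
    while (!stop.load() && frames_written < max_frames)
        encode_one_frame(frames_written);
    return frames_written;
}

// Runs the loop on a worker thread. The calling (GUI) thread stays free
// during `stop_after`, then requests the stop and waits for the worker.
int record_on_worker(std::atomic<bool> &stop, int max_frames,
                     std::chrono::milliseconds stop_after)
{
    int frames = 0;
    std::thread worker([&] { frames = record_until_stopped(stop, max_frames); });
    std::this_thread::sleep_for(stop_after); // a GUI thread would keep handling events here
    stop.store(true);
    worker.join();                           // `frames` is safe to read after join()
    return frames;
}
```

The stop flag only ends the capture loop. The encoder still has to be flushed and `av_write_trailer()` called afterwards, just as after the fixed-duration loop.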

Here is the core Qt code, the whole mainwindow.cpp:

```cpp
#include "mainwindow.h"
#include "ui_mainwindow.h"
#include
#include

// Load a Qt style sheet (QSS) from a resource file and apply it to the whole application
void MainWindow::SetStyle(const QString &qssFile)
{
    QFile file(qssFile);
    if (file.open(QFile::ReadOnly)) {
        QString qss = QLatin1String(file.readAll());
        qApp->setStyleSheet(qss);
        // The palette colour is taken from a fixed position in the QSS text (7 characters from offset 20)
        QString PaletteColor = qss.mid(20, 7);
        qApp->setPalette(QPalette(QColor(PaletteColor)));
        file.close();
    } else {
        qApp->setStyleSheet("");
    }
}

MainWindow::MainWindow(QWidget *parent)
    : QMainWindow(parent)
    , ui(new Ui::MainWindow)
{
    ui->setupUi(this);
    this->SetStyle(":/images/blue.css");            // set the style sheet
    this->setWindowIcon(QIcon(":/images/log.ico")); // set the window icon
    this->setWindowTitle("FFMPEG Test DEMO");
    RefreshCameraList();                            // refresh the camera list

    // Show the access permissions of the camera device node
    QProcess process;
    process.setProcessChannelMode(QProcess::MergedChannels);
    process.start("ls /dev/video0 -l");
    process.waitForFinished();
    QByteArray output = process.readAllStandardOutput();
    QString str_output = output;
    log_Display(str_output);
}

// Append text to the log window
void MainWindow::log_Display(QString text)
{
    ui->plainTextEdit_CameraopenInfo->insertPlainText(text);
}

MainWindow::~MainWindow()
{
    delete ui;
}
```

```cpp
// Print the app's working directory, the FFmpeg version and every codec the library was built with
void MainWindow::get_ffmpeg_version_info()
{
    QProcess process;
    process.start("pwd");
    process.waitForFinished();
    QByteArray output = process.readAllStandardOutput();
    QString str_output = output;
    log_Display("Current working directory of the app: " + str_output);
    log_Display(tr("FFMPEG version: %1\n").arg(av_version_info()));

    av_register_all();
    AVCodec *c_temp = av_codec_next(nullptr);
    QString info = "Codecs supported by FFMPEG:\n";
    while (c_temp != nullptr) {
        if (av_codec_is_decoder(c_temp))
            info += "[Decode]";
        else
            info += "[Encode]";

        switch (c_temp->type) {
        case AVMEDIA_TYPE_VIDEO: info += "[Video]"; break;
        case AVMEDIA_TYPE_AUDIO: info += "[Audio]"; break;
        default:                 info += "[Other]"; break;
        }
        info += c_temp->name;
        info += tr(" ID=%1").arg(c_temp->id);
        info += "\n";
        c_temp = av_codec_next(c_temp);
    }
    log_Display(info);
}
```

```cpp
#define STREAM_DURATION   10.0               // recording length in seconds (hard-coded)
#define STREAM_FRAME_RATE 10                 // frames per second
#define STREAM_PIX_FMT    AV_PIX_FMT_YUV420P // pixel format fed to the encoder
#define SCALE_FLAGS       SWS_BICUBIC

// Width and height of the captured video
int video_width;
int video_height;

// Wrapper around a single output AVStream
typedef struct OutputStream
{
    AVStream *st;
    AVCodecContext *enc;
    int64_t next_pts;          // pts of the next frame that will be generated
    int samples_count;
    AVFrame *frame;
    AVFrame *tmp_frame;
    float t, tincr, tincr2;
    struct SwsContext *sws_ctx;
    struct SwrContext *swr_ctx;
} OutputStream;

// State of the camera input: demuxer, decoder, scaler and frame buffers
typedef struct IntputDev
{
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFormatContext *v_ifmtCtx;
    int videoindex;
    struct SwsContext *img_convert_ctx;
    AVPacket *in_packet;
    AVFrame *pFrame, *pFrameYUV;
} IntputDev;
```
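With `STREAM_DURATION` = 10.0 and `STREAM_FRAME_RATE` = 10, `get_video_frame()` stops once `next_pts`, counted in the codec time base 1/10, reaches 10 seconds. That happens after 100 frames. The double 10.0 is converted to the integer 10 when it is passed to `av_compare_ts()`, which takes `int64_t` timestamps.

The comparison can be sketched with integer cross-multiplication. `Rational`, `compare_ts` and `frames_until_duration` are hypothetical stand-ins for `AVRational` and `av_compare_ts()`. Unlike FFmpeg's function, this sketch does not guard against overflow.

```cpp
#include <cassert>
#include <cstdint>

struct Rational { int64_t num; int64_t den; }; // stand-in for AVRational

// Compare a*ta with b*tb exactly by cross-multiplying (denominators must be positive).
// Returns -1, 0 or 1, like av_compare_ts().
int compare_ts(int64_t a, Rational ta, int64_t b, Rational tb)
{
    assert(ta.den > 0 && tb.den > 0);
    const int64_t lhs = a * ta.num * tb.den;
    const int64_t rhs = b * tb.num * ta.den;
    return (lhs > rhs) - (lhs < rhs);
}

// Number of frames encoded before the stop test in get_video_frame() fires:
// pts runs 0, 1, 2, ... in the codec time base {1, fps}.
int64_t frames_until_duration(int fps, int64_t duration_s)
{
    const Rational codec_tb{1, fps};
    int64_t next_pts = 0;
    while (compare_ts(next_pts, codec_tb, duration_s, Rational{1, 1}) < 0)
        ++next_pts;
    return next_pts;
}
```

The count depends only on the pts counter, not on the wall clock. If the camera delivers frames faster or slower than 10 fps, the file still holds 100 frames stamped 0.1 s apart, so playback speed will differ from real time.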

```cpp
// Debug helper: print the timestamps of a packet (taken from FFmpeg's muxing example)
static void log_packet(const AVFormatContext *fmt_ctx, const AVPacket *pkt)
{
    AVRational *time_base = &fmt_ctx->streams[pkt->stream_index]->time_base;
    printf("pts:%s pts_time:%s dts:%s dts_time:%s duration:%s duration_time:%s stream_index:%d\n",
           av_ts2str(pkt->pts), av_ts2timestr(pkt->pts, time_base),
           av_ts2str(pkt->dts), av_ts2timestr(pkt->dts, time_base),
           av_ts2str(pkt->duration), av_ts2timestr(pkt->duration, time_base),
           pkt->stream_index);
}

// Rescale packet timestamps from the encoder time base to the stream time base, then hand the packet to the muxer
static int write_frame(AVFormatContext *fmt_ctx, const AVRational *time_base, AVStream *st, AVPacket *pkt)
{
    av_packet_rescale_ts(pkt, *time_base, st->time_base);
    pkt->stream_index = st->index;
    log_packet(fmt_ctx, pkt);
    return av_interleaved_write_frame(fmt_ctx, pkt);
}
```
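`write_frame()` has to rescale because the encoder stamps packets in `c->time_base` (1/`STREAM_FRAME_RATE`). The muxer, however, may replace `st->time_base` with a time base of its own choosing during `avformat_write_header()`.

`av_packet_rescale_ts()` applies `av_rescale_q()` to pts, dts and duration. The sketch below shows that arithmetic. `Rational` and `rescale_ts` are hypothetical stand-ins, and 1/90000 is only an example target time base, not necessarily what the MP4 muxer picks.

```cpp
#include <cassert>
#include <cstdint>

struct Rational { int64_t num; int64_t den; }; // stand-in for AVRational

// Rescale `ts` from time base `from` to time base `to`, rounding to the nearest
// integer with ties away from zero (the default rounding of av_rescale_q()).
// Sketch only: no overflow protection for large timestamps.
int64_t rescale_ts(int64_t ts, Rational from, Rational to)
{
    assert(from.den > 0 && to.num > 0);
    const int64_t num = ts * from.num * to.den;
    const int64_t den = from.den * to.num;
    const int64_t half = den / 2;
    return num >= 0 ? (num + half) / den : -((-num + half) / den);
}
```

For example, frame 5 at 10 fps (0.5 s) becomes 45000 in a 1/90000 time base.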

```cpp
// Add an output stream: find the encoder for codec_id, create the AVStream
// and a configured encoder context (based on FFmpeg's muxing example)
static void add_stream(OutputStream *ost, AVFormatContext *oc, AVCodec **codec, enum AVCodecID codec_id)
{
    AVCodecContext *c;
    int i;

    *codec = avcodec_find_encoder(codec_id);
    if (!(*codec)) {
        qDebug("Could not find encoder for '%s'\n", avcodec_get_name(codec_id));
        return;
    }
    ost->st = avformat_new_stream(oc, NULL);
    if (!ost->st) {
        qDebug("Could not allocate stream\n");
        return;
    }
    ost->st->id = oc->nb_streams - 1;
    c = avcodec_alloc_context3(*codec);
    if (!c) {
        qDebug("Could not alloc an encoding context\n");
        return;
    }
    ost->enc = c;

    switch ((*codec)->type) {
    case AVMEDIA_TYPE_AUDIO:
        c->sample_fmt = (*codec)->sample_fmts ? (*codec)->sample_fmts[0] : AV_SAMPLE_FMT_FLTP;
        c->bit_rate = 64000;
        c->sample_rate = 44100;
        if ((*codec)->supported_samplerates) {
            c->sample_rate = (*codec)->supported_samplerates[0];
            for (i = 0; (*codec)->supported_samplerates[i]; i++) {
                if ((*codec)->supported_samplerates[i] == 44100)
                    c->sample_rate = 44100;
            }
        }
        c->channel_layout = AV_CH_LAYOUT_STEREO;
        if ((*codec)->channel_layouts) {
            c->channel_layout = (*codec)->channel_layouts[0];
            for (i = 0; (*codec)->channel_layouts[i]; i++) {
                if ((*codec)->channel_layouts[i] == AV_CH_LAYOUT_STEREO)
                    c->channel_layout = AV_CH_LAYOUT_STEREO;
            }
        }
        c->channels = av_get_channel_layout_nb_channels(c->channel_layout);
        ost->st->time_base = AVRational{1, c->sample_rate};
        break;

    case AVMEDIA_TYPE_VIDEO:
        c->codec_id = codec_id;
        c->bit_rate = 2500000;      // average bit rate (the FFmpeg example uses 400000)
        c->width = video_width;
        c->height = video_height;
        ost->st->time_base = AVRational{1, STREAM_FRAME_RATE}; // frame rate
        c->time_base = ost->st->time_base;
        c->gop_size = 12;           // at most 12 frames between intra frames
        c->pix_fmt = STREAM_PIX_FMT;
        if (c->codec_id == AV_CODEC_ID_MPEG2VIDEO)
            c->max_b_frames = 2;
        if (c->codec_id == AV_CODEC_ID_MPEG1VIDEO)
            c->mb_decision = 2;
        break;

    default:
        break;
    }

    // Some containers want the codec headers stored separately (global header)
    if (oc->oformat->flags & AVFMT_GLOBALHEADER)
        c->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
}
```

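```cpp
// Allocate an AVFrame together with its picture buffers
static AVFrame *alloc_picture(enum AVPixelFormat pix_fmt, int width, int height)
{
    AVFrame *picture = av_frame_alloc();
    if (!picture)
        return nullptr;
    picture->format = pix_fmt;
    picture->width = width;
    picture->height = height;
    int ret = av_frame_get_buffer(picture, 32);
    if (ret < 0) {
        qDebug("Could not allocate frame data.\n");
        av_frame_free(&picture);
        return nullptr;
    }
    return picture;
}

// Open the video encoder and allocate the frame it will be fed with
static void open_video(AVFormatContext *oc, AVCodec *codec, OutputStream *ost, AVDictionary *opt_arg)
{
    int ret;
    AVCodecContext *c = ost->enc;
    AVDictionary *opt = nullptr;
    av_dict_copy(&opt, opt_arg, 0);
    ret = avcodec_open2(c, codec, &opt);
    av_dict_free(&opt);
    if (ret < 0) {
        qDebug("Could not open video codec: %s\n", av_err2str(ret));
        return;
    }
    ost->frame = alloc_picture(c->pix_fmt, c->width, c->height);
    if (!ost->frame) {
        qDebug("Could not allocate video frame\n");
        return;
    }
    qDebug("ost->frame alloc success fmt=%d w=%d h=%d\n", c->pix_fmt, c->width, c->height);
    // If the encoder does not take YUV420P, a temporary YUV420P picture is needed too
    ost->tmp_frame = nullptr;
    if (c->pix_fmt != AV_PIX_FMT_YUV420P) {
        ost->tmp_frame = alloc_picture(AV_PIX_FMT_YUV420P, c->width, c->height);
        if (!ost->tmp_frame) {
            qDebug("Could not allocate temporary picture\n");
            return;
        }
    }
    // Copy the encoder parameters to the stream so the muxer can use them
    ret = avcodec_parameters_from_context(ost->st->codecpar, c);
    if (ret < 0) {
        qDebug("Could not copy the stream parameters\n");
        return;
    }
}

// Encode one frame (frame == nullptr flushes the encoder) and write the packet, if any.
// Returns 1 when encoding is finished, 0 otherwise.
static int write_video_frame(AVFormatContext *oc, OutputStream *ost, AVFrame *frame)
{
    int ret;
    AVCodecContext *c = ost->enc;
    int got_packet = 0;
    AVPacket pkt = {};
    av_init_packet(&pkt);
    ret = avcodec_encode_video2(c, &pkt, frame, &got_packet);
    if (ret < 0) {
        qDebug("Error encoding video frame: %s\n", av_err2str(ret));
        return 1;
    }
    qDebug("st->time_base=%d/%d c->time_base=%d/%d\n", ost->st->time_base.num, ost->st->time_base.den,
           c->time_base.num, c->time_base.den);
    if (got_packet)
        ret = write_frame(oc, &c->time_base, ost->st, &pkt);
    else
        ret = 0;
    if (ret < 0) {
        qDebug("Error while writing video frame: %s\n", av_err2str(ret));
        return 1;
    }
    return (frame || got_packet) ? 0 : 1;
}

// Read one packet from the camera, decode it and convert it to YUV420P for the encoder.
// Returns nullptr once STREAM_DURATION seconds have been recorded (the caller then flushes).
static AVFrame *get_video_frame(OutputStream *ost, IntputDev *input, int *got_pic)
{
    int ret, got_picture = 0;
    AVCodecContext *c = ost->enc;
    AVFrame *ret_frame = nullptr;
    if (av_compare_ts(ost->next_pts, c->time_base, STREAM_DURATION, AVRational{1, 1}) >= 0)
        return nullptr;
    if (av_frame_make_writable(ost->frame) < 0)
        return nullptr;
    if (av_read_frame(input->v_ifmtCtx, input->in_packet) >= 0) {
        if (input->in_packet->stream_index == input->videoindex) {
            ret = avcodec_decode_video2(input->pCodecCtx, input->pFrame, &got_picture, input->in_packet);
            *got_pic = got_picture;
            if (ret < 0) {
                qDebug("Decode error.\n");
                av_packet_unref(input->in_packet);
                return nullptr;
            }
            if (got_picture) {
                sws_scale(input->img_convert_ctx, (const unsigned char *const *)input->pFrame->data,
                          input->pFrame->linesize, 0, input->pCodecCtx->height,
                          ost->frame->data, ost->frame->linesize);
                ost->frame->pts = ost->next_pts++;
                ret_frame = ost->frame;
            }
        }
        av_packet_unref(input->in_packet);
    }
    return ret_frame;
}

static void close_stream(AVFormatContext *oc, OutputStream *ost)
{
    avcodec_free_context(&ost->enc);
    av_frame_free(&ost->frame);
    av_frame_free(&ost->tmp_frame);
    sws_freeContext(ost->sws_ctx);
    swr_free(&ost->swr_ctx);
}
```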

```cpp
// Record STREAM_DURATION seconds from /dev/video0 into an MP4 file in /sdcard/Movies/
int MainWindow::FFMPEG_SaveVideo()
{
    OutputStream video_st = {}, audio_st = {};
    AVOutputFormat *fmt;
    AVFormatContext *oc = nullptr;
    AVCodec *audio_codec, *video_codec;
    int ret;
    int have_video = 0, have_audio = 0;
    int encode_video = 0, encode_audio = 0;
    AVDictionary *opt = NULL;
    int i;

    av_register_all();
    avcodec_register_all();
    avdevice_register_all(); // register the libavdevice input/output devices

    // File name from the current date and time, e.g. /sdcard/Movies/2020-03-09-11-08-08.mp4
    QDateTime dateTime(QDateTime::currentDateTime());
    QString qStr = "/sdcard/Movies/"; // directory where Android stores movies
    qStr += dateTime.toString("yyyy-MM-dd-hh-mm-ss");
    qStr += ".mp4";
    char filename[128];
    qstrncpy(filename, qStr.toLatin1().constData(), sizeof(filename));
    qDebug() << "filename=" << filename;
    log_Display(tr("File name of the recorded video: %1\n").arg(filename));

    avformat_alloc_output_context2(&oc, nullptr, nullptr, filename);
    if (!oc) {
        log_Display("Could not deduce the output format from the file extension: using MPEG.\n");
        avformat_alloc_output_context2(&oc, nullptr, "mpeg", filename);
    }
    if (!oc)
        return 1;

    // Open the camera ----------------------------------------------------
    IntputDev video_input = {};
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFormatContext *v_ifmtCtx = avformat_alloc_context();
    // On Linux the camera is opened through the video4linux2 input device
    AVInputFormat *ifmt = av_find_input_format("video4linux2");
    if (avformat_open_input(&v_ifmtCtx, "/dev/video0", ifmt, NULL) != 0) {
        log_Display("Could not open input stream /dev/video0\n");
        return -1;
    }
    if (avformat_find_stream_info(v_ifmtCtx, nullptr) < 0) {
        log_Display("Could not find stream information.\n");
        return -1;
    }
    int videoindex = -1;
    for (i = 0; i < (int)v_ifmtCtx->nb_streams; i++) {
        if (v_ifmtCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            log_Display(tr("videoindex=%1\n").arg(videoindex));
            break;
        }
    }
    if (videoindex == -1) {
        log_Display("Could not find a video stream.\n");
        return -1;
    }
    pCodecCtx = v_ifmtCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == nullptr) {
        log_Display("Decoder not found.\n");
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        log_Display("Could not open the decoder.\n");
        return -1;
    }
    AVFrame *pFrame = av_frame_alloc();
    AVFrame *pFrameYUV = av_frame_alloc();
    unsigned char *out_buffer = (unsigned char *)av_malloc(
        av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 16));
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P,
                         pCodecCtx->width, pCodecCtx->height, 16);
    log_Display(tr("Camera size (WxH): %1 x %2 \n").arg(pCodecCtx->width).arg(pCodecCtx->height));
    video_width = pCodecCtx->width;
    video_height = pCodecCtx->height;
    struct SwsContext *img_convert_ctx = sws_getContext(
        pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
        pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
    AVPacket *in_packet = av_packet_alloc();
    video_input.img_convert_ctx = img_convert_ctx;
    video_input.in_packet = in_packet;
    video_input.pCodecCtx = pCodecCtx;
    video_input.pCodec = pCodec;
    video_input.v_ifmtCtx = v_ifmtCtx;
    video_input.videoindex = videoindex;
    video_input.pFrame = pFrame;
    video_input.pFrameYUV = pFrameYUV;
    // ---------------------------------------------------- camera opened

    fmt = oc->oformat;
    qDebug("fmt->video_codec = %d\n", fmt->video_codec);
    if (fmt->video_codec != AV_CODEC_ID_NONE) {
        add_stream(&video_st, oc, &video_codec, fmt->video_codec);
        have_video = 1;
        encode_video = 1;
    }
    if (have_video)
        open_video(oc, video_codec, &video_st, opt);
    av_dump_format(oc, 0, filename, 1);

    if (!(fmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&oc->pb, filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            log_Display(tr("Could not open %1: %2\n").arg(filename).arg(av_err2str(ret)));
            return 1;
        }
    }
    ret = avformat_write_header(oc, &opt);
    if (ret < 0) {
        log_Display(tr("Error occurred when opening the output file: %1\n").arg(av_err2str(ret)));
        return 1;
    }

    int got_pic = 0;
    while (encode_video) {
        AVFrame *frame = get_video_frame(&video_st, &video_input, &got_pic);
        if (!got_pic) {
            QThread::msleep(10);
            qDebug() << "msleep(10);\n";
            continue;
        }
        encode_video = !write_video_frame(oc, &video_st, frame);
    }
    qDebug() << "Recording finished\n";
    av_write_trailer(oc);

    qDebug() << "Releasing resources.\n";
    sws_freeContext(video_input.img_convert_ctx);
    avcodec_close(video_input.pCodecCtx);
    av_frame_free(&video_input.pFrameYUV);
    av_frame_free(&video_input.pFrame);
    av_free(out_buffer);
    av_packet_free(&video_input.in_packet);
    avformat_close_input(&video_input.v_ifmtCtx);
    qDebug() << "Closing each codec.\n";
    if (have_video)
        close_stream(oc, &video_st);
    qDebug() << "Closing the output file.\n";
    if (!(fmt->flags & AVFMT_NOFILE))
        avio_closep(&oc->pb);
    qDebug() << "Freeing the stream.\n";
    avformat_free_context(oc);
    return 0;
}
```
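`FFMPEG_SaveVideo()` builds the output name from the current time and copies it into a fixed-size `char` array.

The sketch below builds the same `yyyy-MM-dd-hh-mm-ss.mp4` name with `std::strftime` into a `std::string`, so the path length is not limited by a hand-sized buffer. `make_video_path` is a hypothetical helper. In Qt, keeping a `QByteArray path = qStr.toUtf8();` alive and passing `path.constData()` achieves the same thing.

```cpp
#include <cassert>
#include <ctime>
#include <string>

// Build "<dir>yyyy-MM-dd-hh-mm-ss.mp4" from a broken-down local time.
std::string make_video_path(const std::string &dir, const std::tm &t)
{
    char stamp[32];
    const std::size_t n = std::strftime(stamp, sizeof stamp, "%Y-%m-%d-%H-%M-%S", &t);
    assert(n > 0); // strftime returns 0 if the buffer was too small
    return dir + stamp + ".mp4";
}
```

For 2020-03-09 11:08:08 this yields `/sdcard/Movies/2020-03-09-11-08-08.mp4`, the same form as the file name shown in the code.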

```cpp
// Take a photo: open camera `video` at resolution `size` (e.g. "640x480"),
// grab one frame and show it in label_ImageDisplay
int MainWindow::FFMPEG_Init_Config(const char *video, const char *size)
{
    AVInputFormat *ifmt;
    AVFormatContext *pFormatCtx;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVDictionary *options = nullptr;
    AVPacket *packet;
    AVFrame *pFrame, *pFrameYUV;
    int videoindex;
    int i, ret, got_picture = 0;

    av_register_all();
    avcodec_register_all();
    avdevice_register_all(); // register the libavdevice input/output devices

    ifmt = av_find_input_format("video4linux2");
    pFormatCtx = avformat_alloc_context();
    av_dict_set(&options, "video_size", size, 0); // resolution requested from the camera
    // av_dict_set(&options, "framerate", "30", 0); // camera frame rate in frames per second
    // The frame rate usually needs no setting: it defaults to the highest one, which depends on the resolution.
    if (avformat_open_input(&pFormatCtx, video, ifmt, &options) != 0) {
        log_Display(tr("Failed to open input device: %1\n").arg(video));
        return -1;
    }
    av_dict_free(&options);
    if (avformat_find_stream_info(pFormatCtx, nullptr) < 0) {
        log_Display("Failed to find stream information.\n");
        return -2;
    }
    videoindex = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1) {
        log_Display("No video stream found.\n");
        return -3;
    }
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    log_Display(tr("Camera size (WxH): %1 x %2 \n").arg(pCodecCtx->width).arg(pCodecCtx->height));
    log_Display(tr("codec_id=%1").arg(pCodecCtx->codec_id));
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == nullptr) {
        log_Display("Decoder not found.\n");
        return -4;
    }
    if (avcodec_open2(pCodecCtx, pCodec, nullptr) < 0) {
        log_Display("Failed to open the decoder.\n");
        return -5;
    }
    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    packet = av_packet_alloc();
    // av_image_get_buffer_size() replaces the deprecated avpicture_get_size()
    unsigned char *out_buffer = (unsigned char *)av_malloc(
        av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 16));
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P,
                         pCodecCtx->width, pCodecCtx->height, 16);
    struct SwsContext *img_convert_ctx = sws_getContext(
        pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
        pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);

    // Read one frame
    if (av_read_frame(pFormatCtx, packet) >= 0) {
        log_Display(tr("Data size=%1\n").arg(packet->size)); // size of the captured image data
        if (packet->stream_index == videoindex) {            // only handle the video stream
            // Decode the data read from the camera into pFrame
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                log_Display("Decoding failed.\n");
            } else if (got_picture) {
                int y_size = pCodecCtx->width * pCodecCtx->height;
                // Convert the pixel format (and scale) as configured above
                sws_scale(img_convert_ctx, (const unsigned char *const *)pFrame->data, pFrame->linesize,
                          0, pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);
                unsigned char *p = new unsigned char[y_size * 3 / 2]; // Y + U + V planes
                unsigned char *rgb24_p = new unsigned char[y_size * 3];
                // Copy the YUV planes into one contiguous buffer
                memcpy(p, pFrameYUV->data[0], y_size);
                memcpy(p + y_size, pFrameYUV->data[1], y_size / 4);
                memcpy(p + y_size + y_size / 4, pFrameYUV->data[2], y_size / 4);
                // Convert YUV to RGB24
                YUV420P_to_RGB24(p, rgb24_p, pCodecCtx->width, pCodecCtx->height);
                // Wrap the RGB data in a QImage and show it in the Qt label
                QImage image(rgb24_p, pCodecCtx->width, pCodecCtx->height,
                             pCodecCtx->width * 3, QImage::Format_RGB888);
                QPixmap my_pixmap;
                my_pixmap.convertFromImage(image); // copies the pixels
                ui->label_ImageDisplay->setPixmap(my_pixmap);
                delete[] p;
                delete[] rgb24_p;
            }
        }
        av_packet_unref(packet);
    }

    avcodec_close(pCodecCtx);          // close the decoder
    avformat_close_input(&pFormatCtx); // close the input device
    sws_freeContext(img_convert_ctx);
    av_free(out_buffer);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    av_packet_free(&packet);
    return 0;
}
```
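The three `memcpy` calls above assume that each plane's row stride (`linesize`) equals its width. With `av_image_fill_arrays(..., 16)`, rows can be padded when the width is not a multiple of the alignment. The stride then exceeds the width, and a plain copy would pick up padding bytes.

A stride-aware sketch of the same packing (Y plane, then U, then V) follows. `pack_yuv420p` is a hypothetical helper, and like the original it assumes even width and height.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy three YUV420P planes, each with its own row stride (like AVFrame::linesize),
// into one tightly packed buffer: Y (w*h), then U (w/2 * h/2), then V (w/2 * h/2).
std::vector<uint8_t> pack_yuv420p(const uint8_t *const planes[3], const int linesize[3],
                                  int width, int height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    const int cw = width / 2, ch = height / 2;
    std::vector<uint8_t> out(static_cast<std::size_t>(width) * height * 3 / 2);
    uint8_t *dst = out.data();
    for (int y = 0; y < height; ++y, dst += width)   // luma rows
        std::memcpy(dst, planes[0] + static_cast<std::size_t>(y) * linesize[0], width);
    for (int p = 1; p <= 2; ++p)                     // U rows, then V rows
        for (int y = 0; y < ch; ++y, dst += cw)
            std::memcpy(dst, planes[p] + static_cast<std::size_t>(y) * linesize[p], cw);
    return out;
}
```

In the article's code this would be called with `pFrameYUV->data`, `pFrameYUV->linesize` and the camera width and height. The result can then go straight to `YUV420P_to_RGB24()`.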

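```cpp
// Convert a packed YUV420P buffer (Y plane, then U, then V) to packed RGB24
void MainWindow::YUV420P_to_RGB24(unsigned char *data, unsigned char *rgb, int width, int height)
{
    int index = 0;
    unsigned char *ybase = data;
    unsigned char *ubase = &data[width * height];
    unsigned char *vbase = &data[width * height * 5 / 4];
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            // Each U and V sample is shared by a 2x2 block of pixels
            int Y = ybase[x + y * width];
            int U = ubase[(y / 2) * (width / 2) + x / 2] - 128;
            int V = vbase[(y / 2) * (width / 2) + x / 2] - 128;
            // BT.601 full-range conversion, clamped to 0..255
            int R = static_cast<int>(Y + 1.402 * V);
            int G = static_cast<int>(Y - 0.344136 * U - 0.714136 * V);
            int B = static_cast<int>(Y + 1.772 * U);
            rgb[index++] = qBound(0, R, 255);
            rgb[index++] = qBound(0, G, 255);
            rgb[index++] = qBound(0, B, 255);
        }
    }
}

// … (the beginning of the photo button slot, including its opening #if, is missing from the source text)
    if (ui->comboBox_camera_number->currentText().isEmpty()) {
        log_Display("No camera selected.\n");
        return;
    }
    // Open the selected camera at the selected resolution and grab one frame
    FFMPEG_Init_Config(ui->comboBox_camera_number->currentText().toLatin1().data(),
                       ui->comboBox_CamearSize->currentText().toLatin1().data());
#endif
}
```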

```cpp
void MainWindow::on_pushButton_getInfo_clicked()
{
    get_ffmpeg_version_info();
}

void MainWindow::on_pushButton_RefreshCamear_clicked()
{
    RefreshCameraList();
}

// Refresh the camera list
void MainWindow::RefreshCameraList(void)
{
    QDir dir("/dev"); // directory to scan
    QStringList infolist = dir.entryList(QDir::System);
    ui->comboBox_camera_number->clear();
    for (int i = 0; i < infolist.size(); i++) {
        if (infolist.at(i).contains("video")) {
            ui->comboBox_camera_number->addItem("/dev/" + infolist.at(i));
        }
    }
}

// Record a video
void MainWindow::on_pushButton_clicked()
{
    FFMPEG_SaveVideo();
}
```
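`RefreshCameraList()` lists the `/dev` directory and keeps the video device nodes as full paths for the combo box.

The filtering step can be sketched without Qt. The "entry starts with `video`" rule is an assumption here: the exact condition in the original listing was lost. On some boards several `/dev/video*` nodes exist that are not the USB camera, so the list may need further checking. `video_device_paths` is a hypothetical helper.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Keep entries such as "video0", "video1" from a /dev listing and return them as full paths.
std::vector<std::string> video_device_paths(const std::vector<std::string> &dev_entries)
{
    const std::string prefix = "video";
    std::vector<std::string> paths;
    for (const std::string &name : dev_entries) {
        if (name.compare(0, prefix.size(), prefix) == 0) // name starts with "video"
            paths.push_back("/dev/" + name);
    }
    return paths;
}
```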

```cpp
// Push a video file as a live stream
void MainWindow::on_pushButton_PushVideo_clicked()
{
    QString filename = QFileDialog::getOpenFileName(this, "Select a file to open", "/sdcard/Movies/", tr("*.*"));
    FFMPEG_PushVideo(filename.toLatin1().data(), "rtmp://js.live-send.acg.tv/live-js/?streamname=live_68130189_71037877&key=b95d4cfda0c196518f104839fe5e7573");
}

// Push a video file: re-mux it without re-encoding into FLV sent to an RTMP URL,
// pacing video packets against the wall clock
int MainWindow::FFMPEG_PushVideo(char *video_file, char *url)
{
    AVFormatContext *pInFmtContext = NULL;
    AVStream *in_stream;
    AVCodec *pInCodec;
    AVPacket *in_packet;
    AVFormatContext *pOutFmtContext = NULL;
    AVStream *out_stream;
    AVRational frame_rate;
    double duration;
    int ret;
    char in_file[128] = {0};
    char out_file[256] = {0};
    int videoindex = -1, audioindex = -1;
    int video_frame_count = 0, audio_frame_count = 0;
    int video_frame_size = 0, audio_frame_size = 0;
    int i;

    qstrncpy(in_file, video_file, sizeof(in_file)); // bounded copy, always NUL-terminated
    qstrncpy(out_file, url, sizeof(out_file));
    qDebug() << "in_file=" << in_file;
    qDebug() << "out_file=" << out_file;

    if (avformat_open_input(&pInFmtContext, in_file, NULL, NULL) < 0) {
        qDebug("avformat_open_input failed\n");
        return -1;
    }
    // Query information about all streams in the input
    if (avformat_find_stream_info(pInFmtContext, NULL) < 0) {
        qDebug("avformat_find_stream_info failed\n");
        return -1;
    }
    av_dump_format(pInFmtContext, 0, in_file, 0);

    ret = avformat_alloc_output_context2(&pOutFmtContext, NULL, "flv", out_file);
    if (ret < 0) {
        qDebug("avformat_alloc_output_context2 failed\n");
        return -1;
    }

    for (i = 0; i < (int)pInFmtContext->nb_streams; i++) {
        in_stream = pInFmtContext->streams[i];
        if (in_stream->codecpar->codec_type == AVMEDIA_TYPE_AUDIO)
            audioindex = i;
        if (in_stream->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            frame_rate = av_guess_frame_rate(pInFmtContext, in_stream, NULL);
            qDebug("video: frame_rate:%d/%d\n", frame_rate.num, frame_rate.den);
            duration = av_q2d(AVRational{frame_rate.den, frame_rate.num}); // seconds per frame
        }
        pInCodec = avcodec_find_decoder(in_stream->codecpar->codec_id);
        qDebug("%p, %d\n", (void *)pInCodec, in_stream->codecpar->codec_id);
        out_stream = avformat_new_stream(pOutFmtContext, pInCodec);
        if (out_stream == NULL) {
            qDebug("avformat_new_stream failed:%d\n", i);
            return -1;
        }
        ret = avcodec_parameters_copy(out_stream->codecpar, in_stream->codecpar);
        if (ret < 0) {
            qDebug("avcodec_parameters_copy failed:%d\n", i);
            return -1;
        }
        out_stream->codecpar->codec_tag = 0;
        if (pOutFmtContext->oformat->flags & AVFMT_GLOBALHEADER) {
            // AVFMT_GLOBALHEADER: the container wants the codec headers stored once in its
            // global (file) header. Most containers (e.g. MP4, FLV) do; some, such as MPEG-2 TS, do not.
            out_stream->codec->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
        }
    }
    av_dump_format(pOutFmtContext, 0, out_file, 1);

    ret = avio_open(&pOutFmtContext->pb, out_file, AVIO_FLAG_WRITE);
    if (ret < 0) {
        qDebug("avio_open failed:%d\n", ret);
        return -1;
    }
    int64_t start_time = av_gettime();
    ret = avformat_write_header(pOutFmtContext, NULL);
    if (ret < 0) {
        qDebug("avformat_write_header failed:%d\n", ret);
        return -1;
    }

    in_packet = av_packet_alloc();
    while (1) {
        ret = av_read_frame(pInFmtContext, in_packet);
        if (ret < 0)
            break; // end of file or read error
        in_stream = pInFmtContext->streams[in_packet->stream_index];
        if (in_packet->stream_index == videoindex) {
            video_frame_size += in_packet->size;
            qDebug("recv %5d video frame %5d-%5d\n", ++video_frame_count, in_packet->size, video_frame_size);
        }
        if (in_packet->stream_index == audioindex) {
            audio_frame_size += in_packet->size;
            qDebug("recv %5d audio frame %5d-%5d\n", ++audio_frame_count, in_packet->size, audio_frame_size);
        }
        if (in_stream->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            // Delay based on the packet pts versus the elapsed system time (the better approach)
            AVRational dst_time_base = {1, AV_TIME_BASE};
            int64_t pts_time = av_rescale_q(in_packet->pts, in_stream->time_base, dst_time_base);
            int64_t now_time = av_gettime() - start_time;
            if (pts_time > now_time)
                av_usleep(pts_time - now_time);
        }
        out_stream = pOutFmtContext->streams[in_packet->stream_index];
        av_packet_rescale_ts(in_packet, in_stream->time_base, out_stream->time_base);
        in_packet->pos = -1;
        ret = av_interleaved_write_frame(pOutFmtContext, in_packet);
        if (ret < 0) {
            qDebug("av_interleaved_write_frame failed:%d\n", ret);
            break;
        }
        av_packet_unref(in_packet);
    }

    av_write_trailer(pOutFmtContext);
    av_packet_free(&in_packet);
    avformat_close_input(&pInFmtContext);
    avio_close(pOutFmtContext->pb);
    avformat_free_context(pOutFmtContext);
    return 0;
}
```
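The push loop paces video by converting each packet's pts to microseconds and sleeping until the wall clock catches up. Without this, a file would be sent as fast as it can be read.

A minimal sketch of that delay calculation follows. `Rational` and `send_delay_us` are hypothetical stand-ins for `AVRational` and the inline code. The sketch truncates, whereas `av_rescale_q()` rounds to nearest, so results can differ by one microsecond.

```cpp
#include <cassert>
#include <cstdint>

struct Rational { int64_t num; int64_t den; }; // stand-in for AVRational

// Microseconds to wait before sending a packet so that its presentation time
// (pts in the stream time base) does not run ahead of the wall clock.
// elapsed_us corresponds to av_gettime() - start_time in the code above.
int64_t send_delay_us(int64_t pts, Rational stream_tb, int64_t elapsed_us)
{
    assert(stream_tb.den > 0);
    const int64_t pts_us = pts * stream_tb.num * 1000000 / stream_tb.den;
    return pts_us > elapsed_us ? pts_us - elapsed_us : 0;
}
```

For example, a packet at pts 2000 in a 1/1000 time base (2.0 s) with 1.5 s already elapsed waits 500000 µs. A packet that is already late is sent immediately.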

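For camera access permissions, ADB usage and Android permission issues, see this article:

https://blog.csdn.net/xiaolong1126626497/article/details/104749999

In this code, stream pushing needs network access and video recording needs to write files to the SD card, so some permissions must be added to AndroidManifest.xml.

Other technical issues of Qt on Android development are not covered here; I may write about them later.

The permissions added to AndroidManifest.xml: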

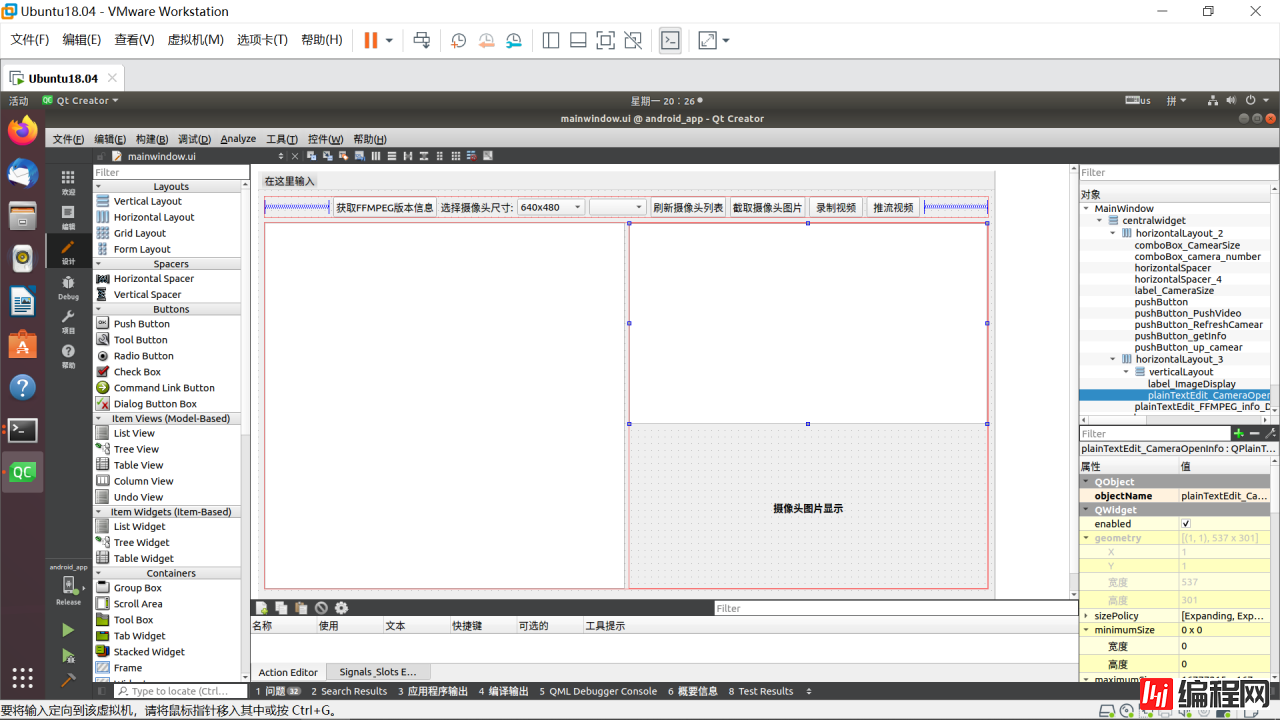

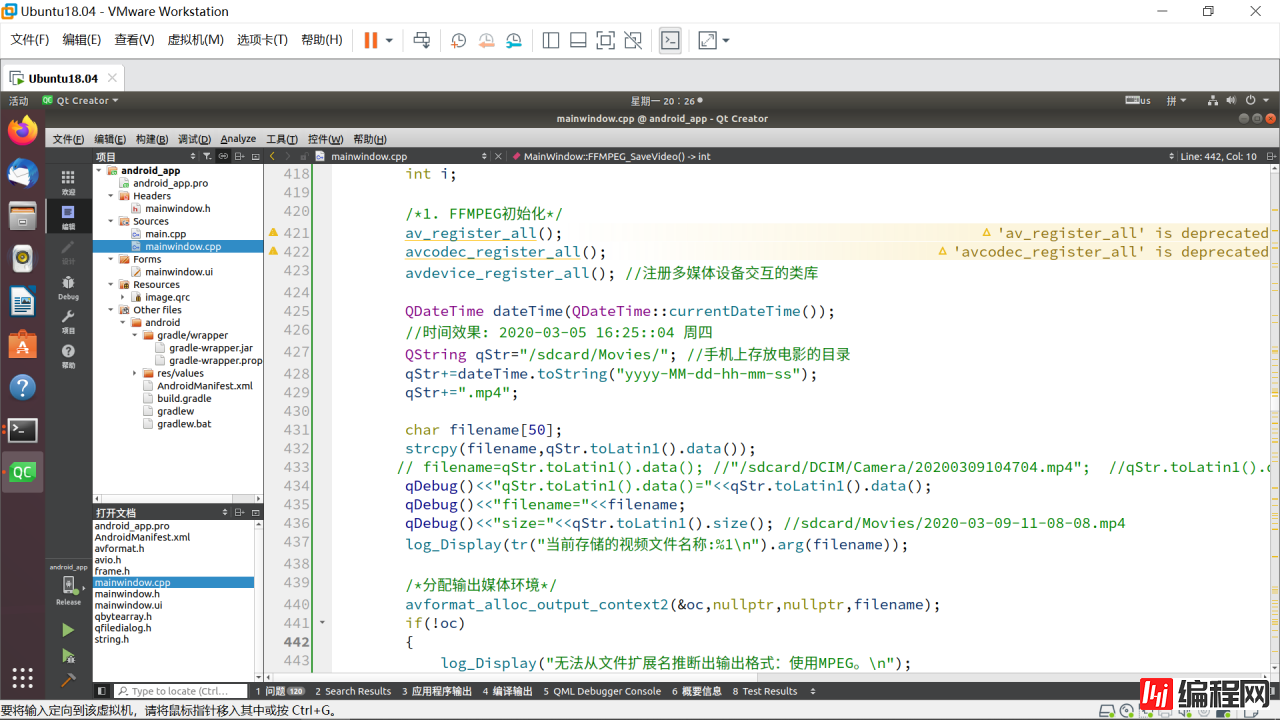
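Recording fails at `avio_open()` if the app cannot write to `/sdcard/Movies/`. On Android 6.0 and later, storage access is a runtime permission, so declaring it in the manifest may not be enough by itself.

A rough pre-check that could be run before recording is sketched below. It uses POSIX `access()`. `can_write_dir` is a hypothetical helper, and on Android's emulated storage the result is only an approximation of what the later `avio_open()` will do.

```cpp
#include <cassert>
#include <string>
#include <unistd.h>

// Returns true if the current process may create files in `dir`
// (write permission plus search permission on the directory).
bool can_write_dir(const std::string &dir)
{
    assert(!dir.empty());
    return ::access(dir.c_str(), W_OK | X_OK) == 0;
}
```

Calling `can_write_dir("/sdcard/Movies")` before `FFMPEG_SaveVideo()` would let the UI report a permission problem instead of only logging the `avio_open()` error.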

QT工程的截图:

这张图是QT工程的结构:

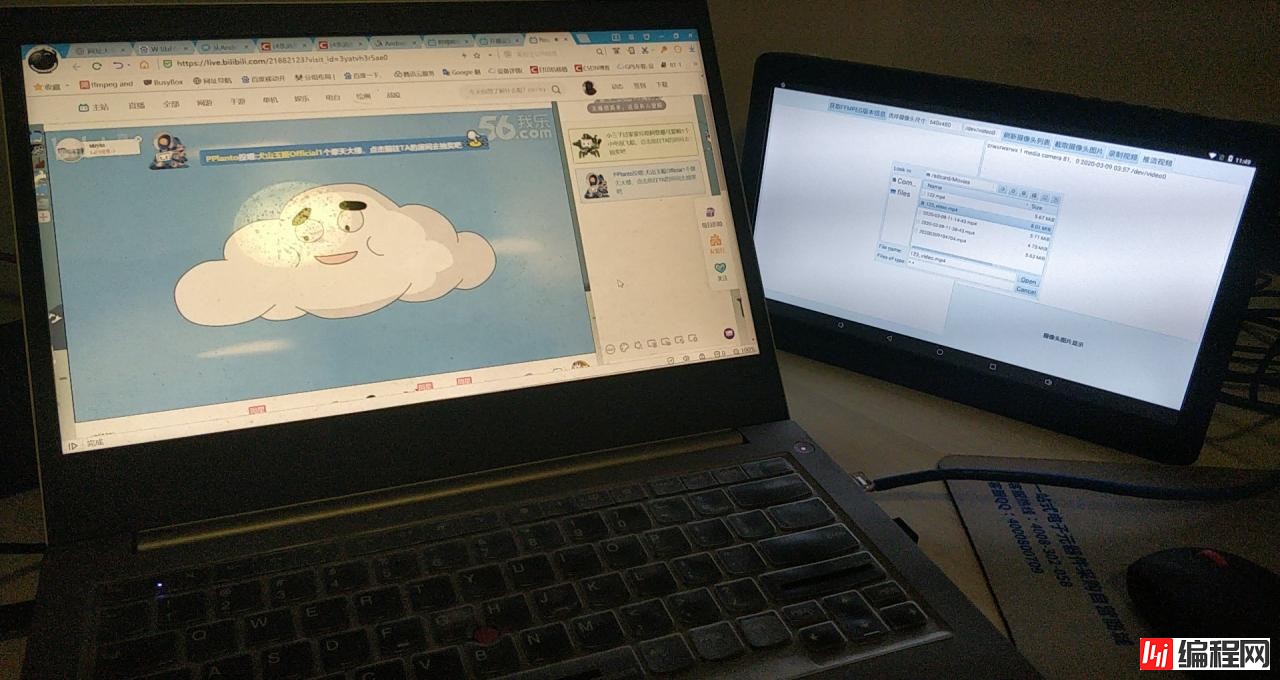

这张图是推流视频到B站的效果: (我这里的推流视频是使用adb提前拷贝到指定目录下的 adb push 123.mp4 /system/xxx)

这张图是拍照显示的效果:

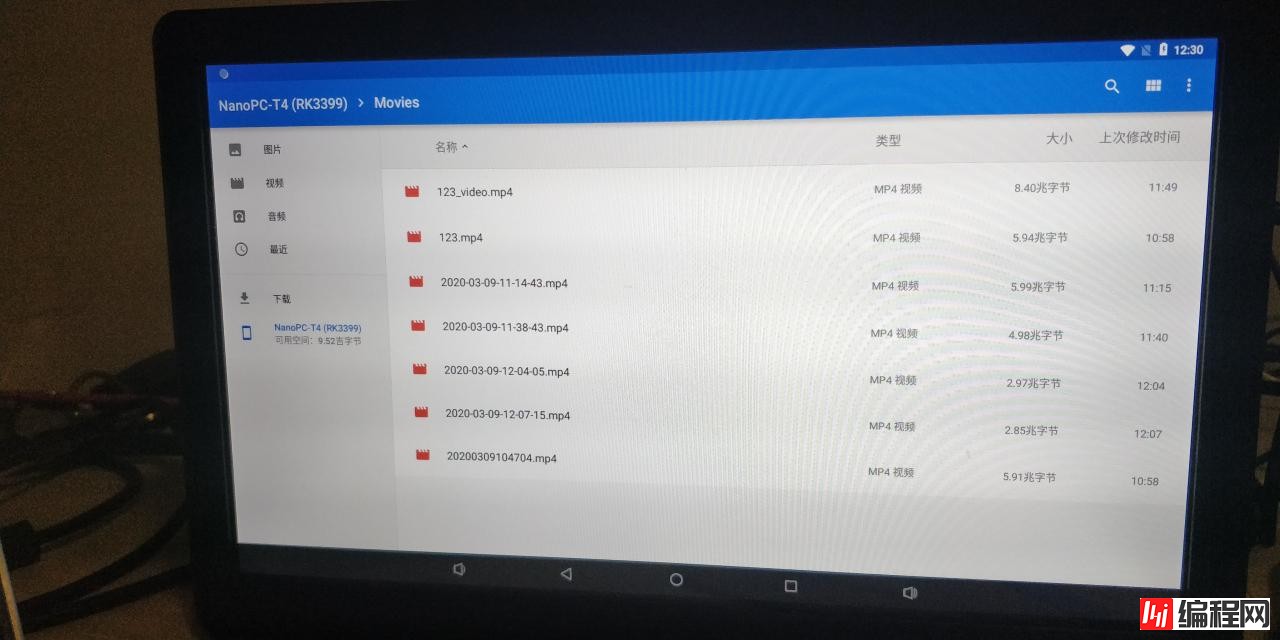

这张图片是录制视频存放到本地的效果:


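Title: QT在Android设备上实现FFMPEG开发: 完成拍照、MP4视频录制、rtsp推流 (FFmpeg development with Qt on Android devices: taking photos, MP4 video recording, rtsp stream pushing)

Link: https://lsjlt.com/news/29082.html (please cite the source link when reposting)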
